
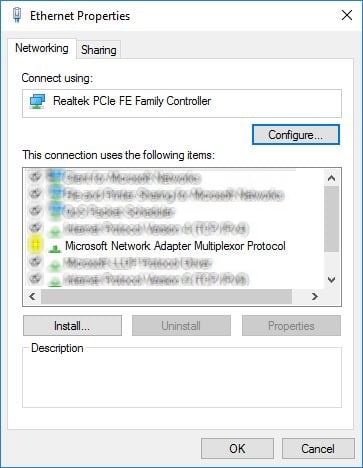

Microsoft Network Adapter Multiplexor Protocol: The Microsoft Network Adapter Multiplexor Protocol service is a kernel-mode driver that is commonly used for network interface card (NIC) bonding, better known as NIC Teaming. NIC Teaming is the main purpose of the protocol: it is used only where two physical network interfaces work together in a load-balancing or redundant configuration. If you are not using NIC Teaming, leave Microsoft Network Adapter Multiplexor Protocol unticked. If the Microsoft Network Adapter Multiplexor Protocol fails to start, the error is logged. Windows 8 and Windows 10 startup proceeds, but a message box is displayed informing you that the NdisImPlatform service has failed to start.

Applies to: Windows Server (Semi-Annual Channel), Windows Server 2016

In this topic, we provide you with instructions to deploy Converged NIC in a Teamed NIC configuration with Switch Embedded Teaming (SET).



The example configuration in this topic describes two Hyper-V hosts, Hyper-V Host 1 and Hyper-V Host 2. Both hosts have two network adapters. On each host, one adapter is connected to the 192.168.1.x/24 subnet, and one adapter is connected to the 192.168.2.x/24 subnet.

- Oct 14, 2018: What is the Microsoft Network Adapter Multiplexor Protocol? It is basically a kernel-mode driver used for network interface card (NIC) bonding. By default, the protocol is installed as part of the physical network adapter initialization. The main purpose of this protocol is NIC teaming.

- Microsoft does not support using this GUI or netcfg to uninstall protocols or built-in drivers. Instead, you can unbind the driver from network adapters either by using this GUI or the PowerShell cmdlet 'Disable-NetAdapterBinding'. This is effectively the same as uninstalling the driver.

- Aug 13, 2018: I've tried reinstalling that protocol, but I get a message saying it can't be found. I've looked around and followed the advice to remove the network adapters from Computer Management (compmgmt) and let Windows reinstall them (it does, just without that protocol), and to remove the network adapters and install them using the latest vendor drivers (same result).

- May 20, 2019: I am trying unsuccessfully to enable the Microsoft Network Adapter Multiplexor Protocol in my Ethernet properties for a Realtek Gaming GbE Family Controller. I need this to reduce the drop-offs I get with several Ethernet-connected radios I use. The prompts ask for a disk, which I do not have (or I do not know where it is on my computer).

Step 1. Test the connectivity between source and destination

Ensure that the physical NIC can connect to the destination host. This test demonstrates connectivity at Layer 3 (L3), the IP layer, as well as across the Layer 2 (L2) virtual local area networks (VLANs).

View the first network adapter properties.

Results:

| Name | InterfaceDescription | ifIndex | Status | MacAddress | LinkSpeed |
|---|---|---|---|---|---|
| Test-40G-1 | Mellanox ConnectX-3 Pro Ethernet Adapter | 11 | Up | E4-1D-2D-07-43-D0 | 40 Gbps |

View additional properties for the first adapter, including the IP address.

Results:

| Parameter | Value |
|---|---|
| IPAddress | 192.168.1.3 |
| InterfaceIndex | 11 |
| InterfaceAlias | Test-40G-1 |
| AddressFamily | IPv4 |
| Type | Unicast |
| PrefixLength | 24 |

View the second network adapter properties.

Results:

| Name | InterfaceDescription | ifIndex | Status | MacAddress | LinkSpeed |
|---|---|---|---|---|---|
| TEST-40G-2 | Mellanox ConnectX-3 Pro Ethernet A..#2 | 13 | Up | E4-1D-2D-07-40-70 | 40 Gbps |

View additional properties for the second adapter, including the IP address.

Results:

| Parameter | Value |
|---|---|
| IPAddress | 192.168.2.3 |
| InterfaceIndex | 13 |
| InterfaceAlias | TEST-40G-2 |
| AddressFamily | IPv4 |
| Type | Unicast |
| PrefixLength | 24 |

Verify that the other NIC Team or SET member pNICs have a valid IP address.

Use a separate subnet (xxx.xxx.2.xxx vs. xxx.xxx.1.xxx) to facilitate sending from this adapter to the destination. Otherwise, if you place both pNICs on the same subnet, the Windows TCP/IP stack load-balances among the interfaces, and simple validation becomes more complicated.
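The separate-subnet rule can be checked before running any connectivity test. The following is a minimal Python sketch, not part of the Windows tooling, that uses the standard `ipaddress` module to confirm that two adapter addresses fall into different /24 networks. The function name `on_separate_subnets` is hypothetical, and the /24 prefix is taken from the example configuration.

```python
import ipaddress


def on_separate_subnets(addr_a: str, addr_b: str, prefix_len: int = 24) -> bool:
    """Return True when the two IPv4 addresses fall into different networks
    of the given prefix length (for example, two different /24 subnets)."""
    # ip_interface() keeps the host address but also exposes its containing network.
    net_a = ipaddress.ip_interface(f"{addr_a}/{prefix_len}").network
    net_b = ipaddress.ip_interface(f"{addr_b}/{prefix_len}").network
    return net_a != net_b


# Addresses from the example: Test-40G-1 and TEST-40G-2 on Hyper-V Host 1,
# and Test-40G-1 versus its destination on Hyper-V Host 2.
print(on_separate_subnets("192.168.1.3", "192.168.2.3"))  # True
print(on_separate_subnets("192.168.1.3", "192.168.1.5"))  # False
```

A `False` result for the two local pNICs would mean they share a subnet, which is the situation the paragraph above warns makes validation harder.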

Step 2. Ensure that source and destination can communicate

In this step, we use the Test-NetConnection Windows PowerShell command, but you can use the ping command if you prefer.

Verify bi-directional communication.

Results:

| Parameter | Value |
|---|---|
| ComputerName | 192.168.1.5 |
| RemoteAddress | 192.168.1.5 |
| InterfaceAlias | Test-40G-1 |
| SourceAddress | 192.168.1.3 |
| PingSucceeded | False |
| PingReplyDetails (RTT) | 0 ms |

In some cases, you might need to disable Windows Firewall with Advanced Security to successfully perform this test. If you disable the firewall, keep security in mind and ensure that your configuration meets your organization's security requirements.

Disable all firewall profiles.

After disabling the firewall profiles, test the connection again.

Results:

| Parameter | Value |
|---|---|
| ComputerName | 192.168.1.5 |
| RemoteAddress | 192.168.1.5 |
| InterfaceAlias | Test-40G-1 |
| SourceAddress | 192.168.1.3 |
| PingSucceeded | False |
| PingReplyDetails (RTT) | 0 ms |

Verify the connectivity for additional NICs. Repeat the previous steps for all subsequent pNICs included in the NIC or SET team.

Results:

| Parameter | Value |
|---|---|
| ComputerName | 192.168.2.5 |
| RemoteAddress | 192.168.2.5 |
| InterfaceAlias | Test-40G-2 |
| SourceAddress | 192.168.2.3 |
| PingSucceeded | False |
| PingReplyDetails (RTT) | 0 ms |

Step 3. Configure the VLAN IDs for NICs installed in your Hyper-V hosts

Many network configurations make use of VLANs, and if you are planning to use VLANs in your network, you must repeat the previous test with VLANs configured.

For this step, the NICs are in ACCESS mode. However, when you create a Hyper-V Virtual Switch (vSwitch) later in this guide, the VLAN properties are applied at the vSwitch port level.

Because a switch can host multiple VLANs, it is necessary for the Top of Rack (ToR) physical switch to have the port that the host is connected to configured in Trunk mode.

Note

Consult your ToR switch documentation for instructions on how to configure Trunk mode on the switch.


The following image shows two Hyper-V hosts with two network adapters each that have VLAN 101 and VLAN 102 configured in network adapter properties.

Tip

According to the Institute of Electrical and Electronics Engineers (IEEE) networking standards, the Quality of Service (QoS) properties in the physical NIC act on the 802.1p header that is embedded within the 802.1Q (VLAN) header when you configure the VLAN ID.
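To make the Tip concrete: the 802.1p priority occupies the top three bits (the Priority Code Point) of the 16-bit Tag Control Information (TCI) field in the 802.1Q header, followed by a 1-bit Drop Eligible Indicator (DEI) and the 12-bit VLAN ID. The Python sketch below packs and unpacks that field; `build_tci` and `parse_tci` are illustrative helpers, not Windows APIs.

```python
def build_tci(priority: int, vlan_id: int, dei: int = 0) -> int:
    """Pack an 802.1Q Tag Control Information (TCI) field.

    Layout (16 bits): 3-bit Priority Code Point (802.1p priority),
    1-bit Drop Eligible Indicator, 12-bit VLAN identifier.
    VLAN IDs 0 and 4095 have special meanings in the standard;
    this sketch only checks that the value fits in 12 bits.
    """
    if not 0 <= priority <= 7:
        raise ValueError("802.1p priority must be 0-7")
    if not 0 <= vlan_id <= 0xFFF:
        raise ValueError("VLAN ID must fit in 12 bits")
    if dei not in (0, 1):
        raise ValueError("DEI is a single bit")
    return (priority << 13) | (dei << 12) | vlan_id


def parse_tci(tci: int) -> tuple[int, int, int]:
    """Split a 16-bit TCI value back into (priority, dei, vlan_id)."""
    return (tci >> 13) & 0x7, (tci >> 12) & 0x1, tci & 0xFFF


# SMB Direct traffic on priority 3, tagged for VLAN 101 as in this guide.
tci = build_tci(priority=3, vlan_id=101)
print(hex(tci))        # 0x6065
print(parse_tci(tci))  # (3, 0, 101)
```

This is why the QoS priority only travels on the wire when a VLAN tag (or at least a priority tag) is present: without the 802.1Q header there is no field to carry it.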

Configure the VLAN ID on the first NIC, Test-40G-1.

Results:

| Name | DisplayName | DisplayValue | RegistryKeyword | RegistryValue |
|---|---|---|---|---|
| TEST-40G-1 | VLAN ID | 101 | VlanID | {101} |

Restart the network adapter to apply the VLAN ID.

Ensure the Status is Up.

Results:

| Name | InterfaceDescription | ifIndex | Status | MacAddress | LinkSpeed |
|---|---|---|---|---|---|
| Test-40G-1 | Mellanox ConnectX-3 Pro Ethernet Ada.. | 11 | Up | E4-1D-2D-07-43-D0 | 40 Gbps |

Configure the VLAN ID on the second NIC, Test-40G-2.

Results:

| Name | DisplayName | DisplayValue | RegistryKeyword | RegistryValue |
|---|---|---|---|---|
| TEST-40G-2 | VLAN ID | 102 | VlanID | {102} |

Restart the network adapter to apply the VLAN ID.

Ensure the Status is Up.

Results:

| Name | InterfaceDescription | ifIndex | Status | MacAddress | LinkSpeed |
|---|---|---|---|---|---|
| Test-40G-2 | Mellanox ConnectX-3 Pro Ethernet Ada.. | 11 | Up | E4-1D-2D-07-43-D1 | 40 Gbps |

Important

It might take several seconds for the device to restart and become available on the network.

Verify the connectivity for the first NIC, Test-40G-1.

If connectivity fails, inspect the switch VLAN configuration or destination participation in the same VLAN.

Results:

| Parameter | Value |
|---|---|
| ComputerName | 192.168.1.5 |
| RemoteAddress | 192.168.1.5 |
| InterfaceAlias | Test-40G-1 |
| SourceAddress | 192.168.1.5 |
| PingSucceeded | True |
| PingReplyDetails (RTT) | 0 ms |

Verify the connectivity for the second NIC, Test-40G-2.

If connectivity fails, inspect the switch VLAN configuration or destination participation in the same VLAN.

Results:

| Parameter | Value |
|---|---|
| ComputerName | 192.168.2.5 |
| RemoteAddress | 192.168.2.5 |
| InterfaceAlias | Test-40G-2 |
| SourceAddress | 192.168.2.3 |
| PingSucceeded | True |
| PingReplyDetails (RTT) | 0 ms |

Important

It's not uncommon for a Test-NetConnection or ping failure to occur immediately after you run Restart-NetAdapter. Wait for the network adapter to fully initialize, and then try again.

If the VLAN 101 connections work, but the VLAN 102 connections don't, the problem might be that the switch needs to be configured to allow port traffic on the desired VLAN. You can check for this by temporarily setting the failing adapters to VLAN 101, and repeating the connectivity test.

The following image shows your Hyper-V hosts after successfully configuring VLANs.

Step 4. Configure Quality of Service (QoS)

Note

You must perform all of the following DCB and QoS configuration steps on all hosts that are intended to communicate with each other.

Install Data Center Bridging (DCB) on each of your Hyper-V hosts.

- Optional for network configurations that use iWarp.

- Required for network configurations that use RoCE (any version) for RDMA services.

Results:

| Success | Restart Needed | Exit Code | Feature Result |
|---|---|---|---|
| True | No | Success | {Data Center Bridging} |

Set the QoS policies for SMB-Direct:

- Optional for network configurations that use iWarp.

- Required for network configurations that use RoCE (any version) for RDMA services.

In this example, the priority value 3 is arbitrary. You can use any value between 1 and 7, as long as you use the same value consistently throughout the configuration of QoS policies.

Results:

| Parameter | Value |
|---|---|
| Name | SMB |
| Owner | Group Policy (Machine) |
| NetworkProfile | All |
| Precedence | 127 |
| JobObject | |
| NetDirectPort | 445 |
| PriorityValue | 3 |

Set additional QoS policies for other traffic on the interface.

Results:

| Parameter | Value |
|---|---|
| Name | DEFAULT |
| Owner | Group Policy (Machine) |
| NetworkProfile | All |
| Precedence | 127 |
| Template | Default |
| JobObject | |
| PriorityValue | 0 |

Turn on Priority Flow Control for SMB traffic, which is not required for iWarp.

Results:

| Priority | Enabled | PolicySet | IfIndex | IfAlias |
|---|---|---|---|---|
| 0 | False | Global | | |
| 1 | False | Global | | |
| 2 | False | Global | | |
| 3 | True | Global | | |
| 4 | False | Global | | |
| 5 | False | Global | | |
| 6 | False | Global | | |
| 7 | False | Global | | |

Important

If your results do not match these results because priorities other than 3 have an Enabled value of True, you must disable flow control for those priorities.

In more complex configurations, the other traffic classes might require flow control; however, these scenarios are outside the scope of this guide.
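The rule in the note above can be expressed as a simple check over the flow-control table. This Python sketch assumes you have copied the Priority and Enabled columns into a dictionary by hand; the function name `pfc_priorities_to_disable` is hypothetical and does not call any Windows cmdlet.

```python
def pfc_priorities_to_disable(pfc_enabled: dict[int, bool], smb_priority: int = 3) -> list[int]:
    """Return the priorities that have flow control enabled but should not.

    pfc_enabled maps each 802.1p priority (0-7) to its Enabled value as read
    from the flow-control table. In the configuration described here, only
    smb_priority is expected to be True.
    """
    if not pfc_enabled.get(smb_priority, False):
        raise ValueError(f"flow control is not enabled for SMB priority {smb_priority}")
    return sorted(p for p, enabled in pfc_enabled.items() if enabled and p != smb_priority)


# Expected state from this guide: only priority 3 is enabled.
good = {p: p == 3 for p in range(8)}
print(pfc_priorities_to_disable(good))  # []

# A hypothetical host where priority 5 was left enabled by an earlier configuration.
bad = {**good, 5: True}
print(pfc_priorities_to_disable(bad))   # [5]
```

Any priority the function returns is a candidate for having flow control turned off, as the note describes.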

Enable QoS for the first NIC, Test-40G-1.

Capabilities:

| Parameter | Hardware | Current |
|---|---|---|
| MacSecBypass | NotSupported | NotSupported |
| DcbxSupport | None | None |
| NumTCs(Max/ETS/PFC) | 8/8/8 | 8/8/8 |

OperationalTrafficClasses:

| TC | TSA | Bandwidth | Priorities |
|---|---|---|---|
| 0 | Strict | | 0-7 |

OperationalFlowControl:

Priority 3 Enabled

OperationalClassifications:

| Protocol | Port/Type | Priority |
|---|---|---|
| Default | | 0 |
| NetDirect | 445 | 3 |

Enable QoS for the second NIC, Test-40G-2.

Capabilities:

| Parameter | Hardware | Current |
|---|---|---|
| MacSecBypass | NotSupported | NotSupported |
| DcbxSupport | None | None |
| NumTCs(Max/ETS/PFC) | 8/8/8 | 8/8/8 |

OperationalTrafficClasses:

| TC | TSA | Bandwidth | Priorities |
|---|---|---|---|
| 0 | Strict | | 0-7 |

OperationalFlowControl:

Priority 3 Enabled

OperationalClassifications:

| Protocol | Port/Type | Priority |
|---|---|---|
| Default | | 0 |
| NetDirect | 445 | 3 |

Reserve half the bandwidth to SMB Direct (RDMA).

Results:

| Name | Algorithm | Bandwidth(%) | Priority | PolicySet | IfIndex | IfAlias |
|---|---|---|---|---|---|---|
| SMB | ETS | 50 | 3 | Global | | |

View the bandwidth reservation settings:

Results:

| Name | Algorithm | Bandwidth(%) | Priority | PolicySet | IfIndex | IfAlias |
|---|---|---|---|---|---|---|
| [Default] | ETS | 50 | 0-2,4-7 | Global | | |
| SMB | ETS | 50 | 3 | Global | | |

(Optional) Create two additional traffic classes for tenant IP traffic.

Results:

| Name | Algorithm | Bandwidth(%) | Priority | PolicySet | IfIndex | IfAlias |
|---|---|---|---|---|---|---|
| IP1 | ETS | 10 | 1 | Global | | |

Results:

| Name | Algorithm | Bandwidth(%) | Priority | PolicySet | IfIndex | IfAlias |
|---|---|---|---|---|---|---|
| IP2 | ETS | 10 | 2 | Global | | |

View the QoS traffic classes.

Results:

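| Name | Algorithm | Bandwidth(%) | Priority | PolicySet | IfIndex | IfAlias |
|---|---|---|---|---|---|---|
| [Default] | ETS | 30 | 0,4-7 | Global | | |
| SMB | ETS | 50 | 3 | Global | | |
| IP1 | ETS | 10 | 1 | Global | | |
| IP2 | ETS | 10 | 2 | Global | | |

(Optional) Override the debugger.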

By default, the attached debugger blocks NetQos.

Results:
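In the traffic-class tables in this step, the [Default] row follows directly from the explicit reservations: it receives whatever bandwidth is left and every priority that no other class claims. With SMB at 50% and IP1 and IP2 at 10% each, that leaves 30% on priorities 0 and 4-7. The Python sketch below reproduces that arithmetic so you can sanity-check a planned allocation; it models the behavior shown in the results and is not a Windows API (`default_class_share` is a hypothetical name).

```python
def default_class_share(
    traffic_classes: dict[str, tuple[int, set[int]]],
) -> tuple[int, list[int]]:
    """Compute what remains for the [Default] ETS class.

    traffic_classes maps a class name to (bandwidth_percent, priorities).
    The default class receives the unreserved bandwidth and every
    802.1p priority (0-7) that no explicit class claims.
    """
    reserved = sum(bw for bw, _ in traffic_classes.values())
    if reserved > 100:
        raise ValueError(f"ETS reservations total {reserved}%, more than 100%")
    claimed = set().union(*(prios for _, prios in traffic_classes.values()))
    remaining = sorted(set(range(8)) - claimed)
    return 100 - reserved, remaining


# SMB only, as after reserving half the bandwidth for SMB Direct.
print(default_class_share({"SMB": (50, {3})}))  # (50, [0, 1, 2, 4, 5, 6, 7])

# SMB plus the two optional tenant IP classes.
classes = {"SMB": (50, {3}), "IP1": (10, {1}), "IP2": (10, {2})}
print(default_class_share(classes))  # (30, [0, 4, 5, 6, 7])
```

Both results match the [Default] rows shown earlier in this step (50% on 0-2,4-7, then 30% on 0,4-7).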

Step 5. Verify the RDMA configuration (Mode 1)

You want to ensure that the fabric is configured correctly prior to creating a vSwitch and transitioning to RDMA (Mode 2).

The following image shows the current state of the Hyper-V hosts.

Verify the RDMA configuration.

Results:

| Name | InterfaceDescription | Enabled |
|---|---|---|
| TEST-40G-1 | Mellanox ConnectX-4 VPI Adapter #2 | True |
| TEST-40G-2 | Mellanox ConnectX-4 VPI Adapter | True |

Determine the ifIndex value of your target adapters.

You use this value in subsequent steps when you run the script you download.

Results:

| InterfaceAlias | InterfaceIndex | IPv4Address |
|---|---|---|
| TEST-40G-1 | 14 | {192.168.1.3} |
| TEST-40G-2 | 13 | {192.168.2.3} |

Download the DiskSpd.exe utility and extract it into C:\TEST.

Download the Test-RDMA PowerShell script to a test folder on your local drive, for example, C:\TEST.

Run the Test-Rdma.ps1 PowerShell script, passing it the ifIndex value of the local adapter along with the IP address of the first remote adapter on the same VLAN.

In this example, the script is passed ifIndex 14 (TEST-40G-1) and the remote network adapter IP address 192.168.1.5.

Results:

Note

If the RDMA traffic fails, specifically in the RoCE case, check your ToR switch configuration for PFC/ETS settings that match the host settings. Refer to the QoS section in this document for reference values.

Run the Test-Rdma.ps1 PowerShell script, passing it the ifIndex value of the local adapter along with the IP address of the second remote adapter on the same VLAN.

In this example, the script is passed ifIndex 13 (TEST-40G-2) and the remote network adapter IP address 192.168.2.5.

Results:

Step 6. Create a Hyper-V vSwitch on your Hyper-V hosts

The following image shows Hyper-V Host 1 with a vSwitch.

Create a vSwitch in SET mode on Hyper-V host 1.

Result:

| Name | SwitchType | NetAdapterInterfaceDescription |
|---|---|---|
| VMSTEST | External | Teamed-Interface |

View the physical adapter team in SET.

Results:

Display two views of the host vNIC

Results:

| Name | InterfaceDescription | ifIndex | Status | MacAddress | LinkSpeed |
|---|---|---|---|---|---|
| vEthernet (VMSTEST) | Hyper-V Virtual Ethernet Adapter #2 | 28 | Up | E4-1D-2D-07-40-71 | 80 Gbps |

View additional properties of the host vNIC.

Results:

| Name | IsManagementOs | VMName | SwitchName | MacAddress | Status | IPAddresses |
|---|---|---|---|---|---|---|
| VMSTEST | True | | VMSTEST | E41D2D074071 | {Ok} | |

Test the network connection to the remote VLAN 101 adapter.

Results:

Step 7. Remove the Access VLAN setting

In this step, you remove the ACCESS VLAN setting from the physical NIC and set the VLAN ID by using the vSwitch.

You must remove the ACCESS VLAN setting to prevent the physical NIC from both auto-tagging egress traffic with the incorrect VLAN ID and filtering ingress traffic that doesn't match the ACCESS VLAN ID.

Remove the setting.

Set the VLAN ID.

Results:

Test the network connection.

Results:

Important

If your results are not similar to the example results and ping fails with the message 'WARNING: Ping to 192.168.1.5 failed -- Status: DestinationHostUnreachable,' confirm that the “vEthernet (VMSTEST)” adapter has the proper IP address.

If the IP address is not set, correct the issue.

Rename the Management NIC.

Results:

| Name | IsManagementOs | VMName | SwitchName | MacAddress | Status | IPAddresses |
|---|---|---|---|---|---|---|
| CORP-External-Switch | True | | CORP-External-Switch | 001B785768AA | {Ok} | |
| MGT | True | | VMSTEST | E41D2D074071 | {Ok} | |

View additional NIC properties.

Results:

| Name | InterfaceDescription | ifIndex | Status | MacAddress | LinkSpeed |
|---|---|---|---|---|---|
| vEthernet (MGT) | Hyper-V Virtual Ethernet Adapter #2 | 28 | Up | E4-1D-2D-07-40-71 | 80 Gbps |

Step 8. Test Hyper-V vSwitch RDMA

The following image shows the current state of your Hyper-V hosts, including the vSwitch on Hyper-V Host 1.

Set the priority tagging on the Host vNIC to complement the previous VLAN settings.

Results:

| Name | IeeePriorityTag |
|---|---|
| MGT | On |

Create two host vNICs for RDMA and connect them to the vSwitch VMSTEST.

View the Management NIC properties.

Results:

| Name | IsManagementOs | VMName | SwitchName | MacAddress | Status | IPAddresses |
|---|---|---|---|---|---|---|
| CORP-External-Switch | True | | CORP-External-Switch | 001B785768AA | {Ok} | |
| Mgt | True | | VMSTEST | E41D2D074071 | {Ok} | |
| SMB1 | True | | VMSTEST | 00155D30AA00 | {Ok} | |
| SMB2 | True | | VMSTEST | 00155D30AA01 | {Ok} | |

Step 9. Assign an IP address to the SMB Host vNICs vEthernet (SMB1) and vEthernet (SMB2)

The TEST-40G-1 and TEST-40G-2 physical adapters still have an ACCESS VLAN of 101 and 102 configured. Because of this, the adapters tag the traffic, and ping succeeds. Previously, you configured both pNIC VLAN IDs to zero, then set the VMSTEST vSwitch to VLAN 101. After that, you were still able to ping the remote VLAN 101 adapter by using the MGT vNIC, but there are currently no VLAN 102 members.

Remove the ACCESS VLAN setting from the physical NIC to prevent it from auto-tagging egress traffic with the incorrect VLAN ID and from filtering ingress traffic that doesn't match the ACCESS VLAN ID.

Results:

Test the remote VLAN 102 adapter.

Results:

Add a new IP address for interface vEthernet (SMB2).

Results:

Test the connection again.

Place the RDMA Host vNICs on the pre-existing VLAN 102.

Results:

Inspect the mapping of SMB1 and SMB2 to the underlying physical NICs under the vSwitch SET Team.

The association of Host vNIC to Physical NICs is random and subject to rebalancing during creation and destruction. In this circumstance, you can use an indirect mechanism to check the current association. The MAC addresses of SMB1 and SMB2 are associated with the NIC Team member TEST-40G-2. This is not ideal because Test-40G-1 does not have an associated SMB Host vNIC, and will not allow for utilization of RDMA traffic over the link until an SMB Host vNIC is mapped to it.

Results:

View the VM network adapter properties.

Results:

View the network adapter team mapping.

The results should not return information because you have not yet performed mapping.
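Because the vNIC-to-team-member association can only be checked indirectly through MAC addresses, and the outputs in this guide print the same address both as E4-1D-2D-07-40-71 and as E41D2D074071, it helps to compare normalized values. The Python sketch below normalizes MAC strings and flags team members that carry no SMB host vNIC. It assumes you build the vNIC-to-pNIC dictionary by hand from the command output; `normalize_mac` and `unmapped_team_members` are hypothetical helpers, and the "after" mapping is only an illustration.

```python
import re


def normalize_mac(mac: str) -> str:
    """Normalize a MAC address to 12 uppercase hex digits without separators.

    Accepts forms such as 'E4-1D-2D-07-40-71', 'e4:1d:2d:07:40:71' or 'E41D2D074071'.
    """
    digits = re.sub(r"[^0-9A-Fa-f]", "", mac).upper()
    if len(digits) != 12:
        raise ValueError(f"not a 48-bit MAC address: {mac!r}")
    return digits


def unmapped_team_members(vnic_to_pnic: dict[str, str], team_members: list[str]) -> list[str]:
    """Return the team members that carry none of the given host vNICs."""
    used = set(vnic_to_pnic.values())
    return [member for member in team_members if member not in used]


team = ["TEST-40G-1", "TEST-40G-2"]
print(normalize_mac("E4-1D-2D-07-40-71") == normalize_mac("E41D2D074071"))  # True

# The situation described above: both SMB vNICs landed on TEST-40G-2.
before = {"SMB1": "TEST-40G-2", "SMB2": "TEST-40G-2"}
print(unmapped_team_members(before, team))  # ['TEST-40G-1']

# A hypothetical result after mapping each SMB vNIC to its own team member.
after = {"SMB1": "TEST-40G-1", "SMB2": "TEST-40G-2"}
print(unmapped_team_members(after, team))   # []
```

An empty list means every team member has an SMB host vNIC, so RDMA traffic can use both links.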

Map SMB1 and SMB2 to separate physical NIC team members, and view the results of your actions.

Important

Ensure that you complete this step before proceeding, or your implementation fails.

Results:

Confirm the MAC associations created previously.

Results:

Test the connection from the remote system because both Host vNICs reside on the same subnet and have the same VLAN ID (102).

Results:

Results:

Set the name, switch name, and priority tags.

Results:

View the vEthernet network adapter properties.

Results:

Enable the vEthernet network adapters.

Results:

Step 10. Validate the RDMA functionality

You want to validate the RDMA functionality from the remote system to the local system, which has a vSwitch, to both members of the vSwitch SET team.

Because both Host vNICs (SMB1 and SMB2) are assigned to VLAN 102, you can select the VLAN 102 adapter on the remote system.

In this example, the NIC Test-40G-2 does RDMA to SMB1 (192.168.2.111) and SMB2 (192.168.2.222).

Tip

You might need to disable the firewall on this system. Consult your fabric policy for details.

Results:

View the network adapter properties.

Results:

View the network adapter RDMA information.

Results:

Perform the RDMA traffic test on the first physical adapter.

Results:

Perform the RDMA traffic test on the second physical adapter.

Results:

Test for RDMA traffic from the local to the remote computer.

Results:

Perform the RDMA traffic test on the first virtual adapter.

Results:

Perform the RDMA traffic test on the second virtual adapter.

Results:

The final line in this output, 'RDMA traffic test SUCCESSFUL: RDMA traffic was sent to 192.168.2.5,' shows that you have successfully configured Converged NIC on your adapter.


The Remote Desktop Protocol (RDP) connection to your Windows-based Azure virtual machine (VM) can fail for various reasons, leaving you unable to access your VM. The issue can be with the Remote Desktop service on the VM, the network connection, or the Remote Desktop client on your host computer. This article guides you through some of the most common methods to resolve RDP connection issues.

If you need more help at any point in this article, you can contact the Azure experts on the MSDN Azure and Stack Overflow forums. Alternatively, you can file an Azure support incident. Go to the Azure support site and select Get Support.

Quick troubleshooting steps

After each troubleshooting step, try reconnecting to the VM:

- Reset Remote Desktop configuration.

- Check Network Security Group rules / Cloud Services endpoints.

- Review VM console logs.

- Reset the NIC for the VM.

- Check the VM Resource Health.

- Reset your VM password.

- Restart your VM.

- Redeploy your VM.

Continue reading if you need more detailed steps and explanations. Verify that local network equipment, such as routers and firewalls, is not blocking outbound TCP port 3389, as noted in detailed RDP troubleshooting scenarios.
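To confirm from your own computer that outbound TCP 3389 is not blocked locally, you can attempt the same TCP handshake an RDP client starts with. The Python sketch below uses only the standard library; the function name `tcp_port_reachable` is hypothetical, and the VM address is a placeholder you must supply.

```python
import socket


def tcp_port_reachable(host: str, port: int = 3389, timeout: float = 5.0) -> bool:
    """Try to open a TCP connection; return True if the handshake completes.

    A False result means the connection was refused, timed out, or could not
    be resolved or routed; it does not say which device along the path blocked it.
    """
    try:
        # create_connection resolves the host and performs the TCP handshake;
        # the with-block closes the socket again immediately.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refusals, timeouts and name-resolution errors
        return False


# Example (replace the placeholder with your VM's public IP address or DNS name):
# tcp_port_reachable("<your VM public IP>")
```

A successful handshake only shows that the path to port 3389 is open; authentication and RDP-level problems still need the steps below.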

Tip

If the Connect button for your VM is grayed out in the portal and you are not connected to Azure via an ExpressRoute or Site-to-Site VPN connection, you need to create and assign your VM a public IP address before you can use RDP. You can read more about public IP addresses in Azure.

Ways to troubleshoot RDP issues

You can troubleshoot VMs created using the Resource Manager deployment model by using one of the following methods:

- Azure portal - great if you need to quickly reset the RDP configuration or user credentials and you don't have the Azure tools installed.

- Azure PowerShell - if you are comfortable with a PowerShell prompt, quickly reset the RDP configuration or user credentials using the Azure PowerShell cmdlets.

You can also find steps on troubleshooting VMs created using the Classic deployment model.

Troubleshoot using the Azure portal

After each troubleshooting step, try connecting to your VM again. If you still cannot connect, try the next step.

Reset your RDP connection. This troubleshooting step resets the RDP configuration when Remote Connections are disabled or Windows Firewall rules are blocking RDP, for example.

Select your VM in the Azure portal. Scroll down the settings pane to the Support + Troubleshooting section near the bottom of the list. Click the Reset password button. Set the Mode to Reset configuration only, and then click the Update button.

Verify Network Security Group rules. Use IP flow verify to confirm whether a rule in a Network Security Group is blocking traffic to or from a virtual machine. You can also review effective security group rules to ensure that an inbound 'Allow' NSG rule exists and is prioritized for the RDP port (default 3389). For more information, see Using Effective Security Rules to troubleshoot VM traffic flow.

Review VM boot diagnostics. This troubleshooting step reviews the VM console logs to determine if the VM is reporting an issue. Not all VMs have boot diagnostics enabled, so this troubleshooting step may be optional.

Specific troubleshooting steps are beyond the scope of this article, but may indicate a wider problem that is affecting RDP connectivity. For more information on reviewing the console logs and VM screenshot, see Boot Diagnostics for VMs.

Reset the NIC for the VM. For more information, see how to reset NIC for Azure Windows VM.

Check the VM Resource Health. This troubleshooting step verifies there are no known issues with the Azure platform that may impact connectivity to the VM.

Select your VM in the Azure portal. Scroll down the settings pane to the Support + Troubleshooting section near the bottom of the list. Click the Resource health button. A healthy VM reports as being Available.

Reset user credentials. This troubleshooting step resets the password on a local administrator account when you are unsure or have forgotten the credentials. Once you have logged into the VM, you should reset the password for that user.

Select your VM in the Azure portal. Scroll down the settings pane to the Support + Troubleshooting section near the bottom of the list. Click the Reset password button. Make sure the Mode is set to Reset password, and then enter your username and a new password. Finally, click the Update button.

Restart your VM. This troubleshooting step can correct any underlying issues the VM itself is having.

Select your VM in the Azure portal and click the Overview tab. Click the Restart button.

Redeploy your VM. This troubleshooting step redeploys your VM to another host within Azure to correct any underlying platform or networking issues.

Select your VM in the Azure portal. Scroll down the settings pane to the Support + Troubleshooting section near the bottom of the list. Click the Redeploy button, and then click Redeploy.

After this operation finishes, ephemeral disk data is lost and dynamic IP addresses that are associated with the VM are updated.

Verify routing. Use Network Watcher's Next hop capability to confirm that a route isn't preventing traffic from being routed to or from a virtual machine. You can also review effective routes to see all effective routes for a network interface. For more information, see Using effective routes to troubleshoot VM traffic flow.

Ensure that any on-premises firewall, or firewall on your computer, allows outbound TCP 3389 traffic to Azure.

If you are still encountering RDP issues, you can open a support request or read more detailed RDP troubleshooting concepts and steps.

Troubleshoot using Azure PowerShell

If you haven't already, install and configure the latest Azure PowerShell.

The following examples use variables such as myResourceGroup, myVM, and myVMAccessExtension. Replace these variable names and locations with your own values.


Note

You reset the user credentials and the RDP configuration by using the Set-AzVMAccessExtension PowerShell cmdlet. In the following examples, myVMAccessExtension is a name that you specify as part of the process. If you have previously worked with the VMAccessAgent, you can get the name of the existing extension by using Get-AzVM -ResourceGroupName 'myResourceGroup' -Name 'myVM' to check the properties of the VM. To view the name, look under the 'Extensions' section of the output.

After each troubleshooting step, try connecting to your VM again. If you still cannot connect, try the next step.

Reset your RDP connection. This troubleshooting step resets the RDP configuration when Remote Connections are disabled or Windows Firewall rules are blocking RDP, for example.

The following example resets the RDP connection on a VM named myVM in the WestUS location and in the resource group named myResourceGroup.

Verify Network Security Group rules. This troubleshooting step verifies that you have a rule in your Network Security Group to permit RDP traffic. The default port for RDP is TCP port 3389. A rule to permit RDP traffic may not be created automatically when you create your VM.

First, assign all the configuration data for your Network Security Group to the $rules variable. The following example obtains information about the Network Security Group named myNetworkSecurityGroup in the resource group named myResourceGroup.

Now, view the rules that are configured for this Network Security Group. Verify that a rule exists to allow TCP port 3389 for inbound connections.

The following example shows a valid security rule that permits RDP traffic. You can see that Protocol, DestinationPortRange, Access, and Direction are configured correctly.

If you do not have a rule that allows RDP traffic, create a Network Security Group rule. Allow TCP port 3389.
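The fields named above (Protocol, DestinationPortRange, Access, and Direction), together with each rule's priority, decide whether RDP is allowed: NSG rules are evaluated from the lowest priority number upward, and the first matching rule wins. The Python sketch below models that evaluation for inbound TCP 3389. It is a simplification: it ignores source and destination address prefixes, and it treats "no matching rule" as a deny, approximating the default DenyAllInBound rule for internet traffic. The class, function, and rule names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SecurityRule:
    name: str
    priority: int                # lower number = evaluated first
    direction: str               # "Inbound" or "Outbound"
    access: str                  # "Allow" or "Deny"
    protocol: str                # "Tcp", "Udp" or "*"
    destination_port_range: str  # "3389", "3000-4000" or "*"


def port_matches(port_range: str, port: int) -> bool:
    """Check a single port against '*', a 'low-high' range, or one port number."""
    if port_range == "*":
        return True
    if "-" in port_range:
        low, high = (int(p) for p in port_range.split("-", 1))
        return low <= port <= high
    return int(port_range) == port


def rdp_allowed(rules: list[SecurityRule], port: int = 3389) -> bool:
    """Return True if the first matching inbound TCP rule (by priority) allows the port."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.direction != "Inbound" or rule.protocol not in ("Tcp", "*"):
            continue
        if port_matches(rule.destination_port_range, port):
            return rule.access == "Allow"
    return False  # simplification: no match is treated like the default deny


rules = [
    SecurityRule("default-allow-rdp", 1000, "Inbound", "Allow", "Tcp", "3389"),
    SecurityRule("deny-admin-ports", 900, "Inbound", "Deny", "*", "3000-4000"),
]
print(rdp_allowed(rules))      # False: the Deny rule at priority 900 is evaluated first
print(rdp_allowed(rules[:1]))  # True
```

The first result illustrates why an Allow rule for 3389 is not enough on its own: it must also win on priority against any broader Deny rule.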

Reset user credentials. This troubleshooting step resets the password on the local administrator account that you specify when you are unsure of, or have forgotten, the credentials.

First, specify the username and a new password by assigning credentials to the $cred variable.

Now, update the credentials on your VM. The following example updates the credentials on a VM named myVM in the WestUS location and in the resource group named myResourceGroup.

Restart your VM. This troubleshooting step can correct any underlying issues the VM itself is having.

The following example restarts the VM named myVM in the resource group named myResourceGroup.

Redeploy your VM. This troubleshooting step redeploys your VM to another host within Azure to correct any underlying platform or networking issues.

The following example redeploys the VM named myVM in the WestUS location and in the resource group named myResourceGroup.

Verify routing. Use Network Watcher's Next hop capability to confirm that a route isn't preventing traffic from being routed to or from a virtual machine. You can also review effective routes to see all effective routes for a network interface. For more information, see Using effective routes to troubleshoot VM traffic flow.

Ensure that any on-premises firewall, or firewall on your computer, allows outbound TCP 3389 traffic to Azure.

If you are still encountering RDP issues, you can open a support request or read more detailed RDP troubleshooting concepts and steps.


Troubleshoot VMs created using the Classic deployment model

Important

Classic VMs will be retired on March 1, 2023.

If you use IaaS resources from ASM, please complete your migration by March 1, 2023. We encourage you to make the switch sooner to take advantage of the many feature enhancements in Azure Resource Manager.

For more information, see Migrate your IaaS resources to Azure Resource Manager by March 1, 2023.

After each troubleshooting step, try reconnecting to the VM.

Reset your RDP connection. This troubleshooting step resets the RDP configuration when Remote Connections are disabled or Windows Firewall rules are blocking RDP, for example.

Select your VM in the Azure portal. Click the ...More button, and then click Reset Remote Access.

Verify Cloud Services endpoints. This troubleshooting step verifies that you have endpoints in your Cloud Services to permit RDP traffic. The default port for RDP is TCP port 3389. A rule to permit RDP traffic may not be created automatically when you create your VM.

Select your VM in the Azure portal. Click the Endpoints button to view the endpoints currently configured for your VM. Verify that endpoints exist that allow RDP traffic on TCP port 3389.

The following example shows valid endpoints that permit RDP traffic:

If you do not have an endpoint that allows RDP traffic, create a Cloud Services endpoint. Allow TCP to private port 3389.

Review VM boot diagnostics. This troubleshooting step reviews the VM console logs to determine if the VM is reporting an issue. Not all VMs have boot diagnostics enabled, so this troubleshooting step may be optional.

Specific troubleshooting steps are beyond the scope of this article, but may indicate a wider problem that is affecting RDP connectivity. For more information on reviewing the console logs and VM screenshot, see Boot Diagnostics for VMs.

Check the VM Resource Health. This troubleshooting step verifies there are no known issues with the Azure platform that may impact connectivity to the VM.

Select your VM in the Azure portal. Scroll down the settings pane to the Support + Troubleshooting section near the bottom of the list. Click the Resource Health button. A healthy VM reports as being Available.

Reset user credentials. This troubleshooting step resets the password on the local administrator account that you specify when you are unsure of, or have forgotten, the credentials. Once you have logged into the VM, you should reset the password for that user.

Select your VM in the Azure portal. Scroll down the settings pane to the Support + Troubleshooting section near the bottom of the list. Click the Reset password button. Enter your username and a new password. Finally, click the Save button.

Restart your VM. This troubleshooting step can correct any underlying issues the VM itself is having.

Select your VM in the Azure portal and click the Overview tab. Click the Restart button.

Ensure that any on-premises firewall, or firewall on your computer, allows outbound TCP 3389 traffic to Azure.

If you are still encountering RDP issues, you can open a support request or read more detailed RDP troubleshooting concepts and steps.


Troubleshoot specific RDP errors

You may encounter a specific error message when trying to connect to your VM via RDP. The following are the most common error messages:


- The remote session was disconnected because there are no Remote Desktop License Servers available to provide a license.

- Remote Desktop can't find the computer 'name'.

- An authentication error has occurred. The Local Security Authority cannot be contacted.

- Windows Security error: Your credentials did not work.

- This computer can't connect to the remote computer.


Additional resources

If none of these errors occurred and you still can't connect to the VM via Remote Desktop, read the detailed troubleshooting guide for Remote Desktop.


- For troubleshooting steps in accessing applications running on a VM, see Troubleshoot access to an application running on an Azure VM.

- If you are having issues using Secure Shell (SSH) to connect to a Linux VM in Azure, see Troubleshoot SSH connections to a Linux VM in Azure.